Counterfactual Rotation: Dual-Path Attention for Robust Prediction

Extending Transformer Attention with Dual-Path Counterfactuals — Poster by Andre Kramer (andrekramermsc.substack.com).

Column 1: Motivation & Equation

Transformers perform well in-distribution (ID) but collapse on out-of-distribution (OOD) inputs.

Negations and counterfactuals expose brittle factual attention.

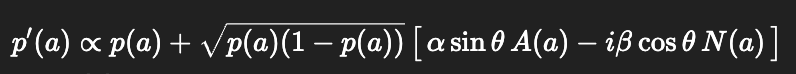

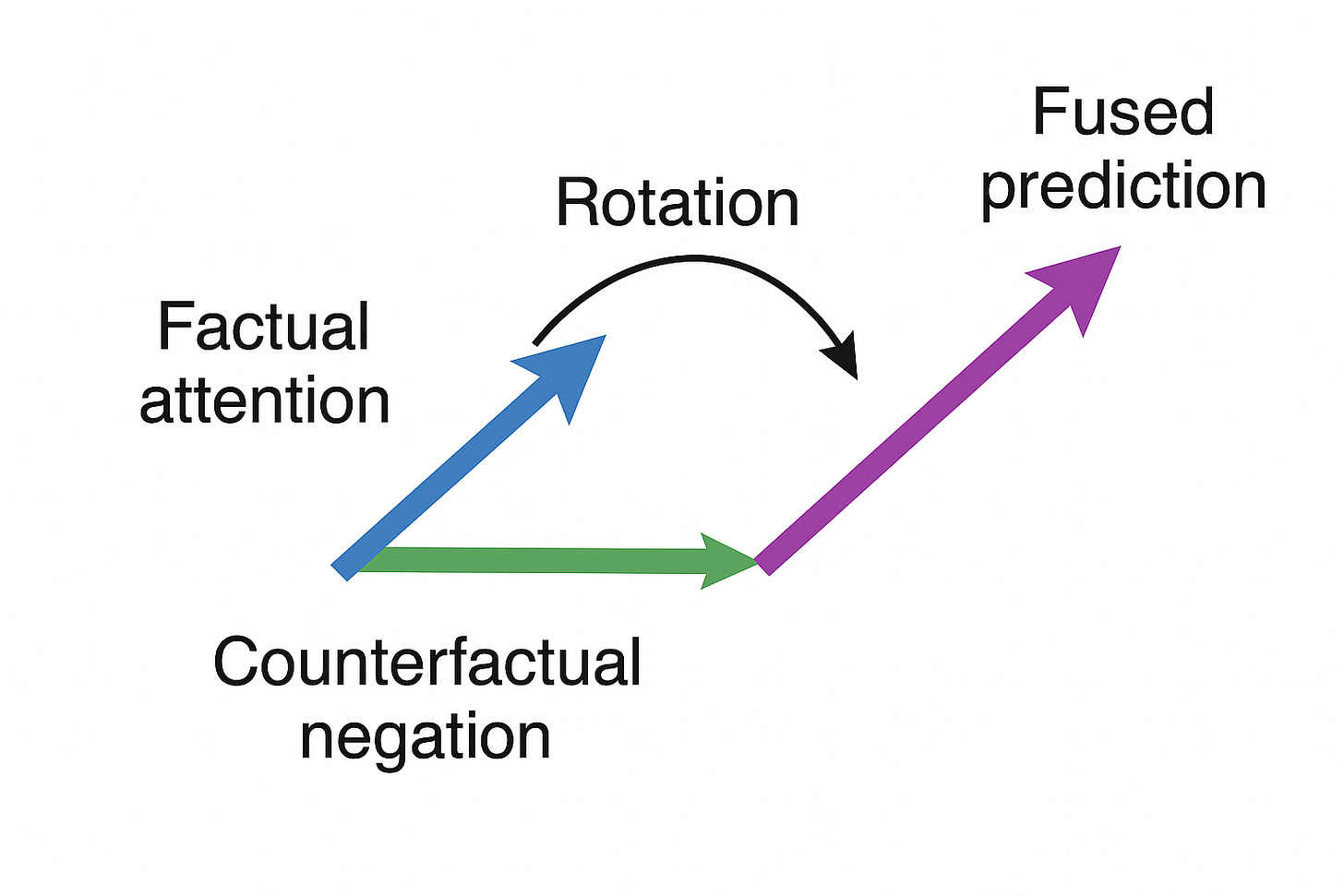

Idea: Rotate probability mass toward counterfactual evidence.

Key equation (architectural inspiration):

$$p'(a) \propto p(a) + \sqrt{p(a)\,(1 - p(a))}\,\bigl[\alpha \sin\theta \, A(a) - i\,\beta \cos\theta \, N(a)\bigr]$$

A(a): factual attention

N(a): counterfactual negation

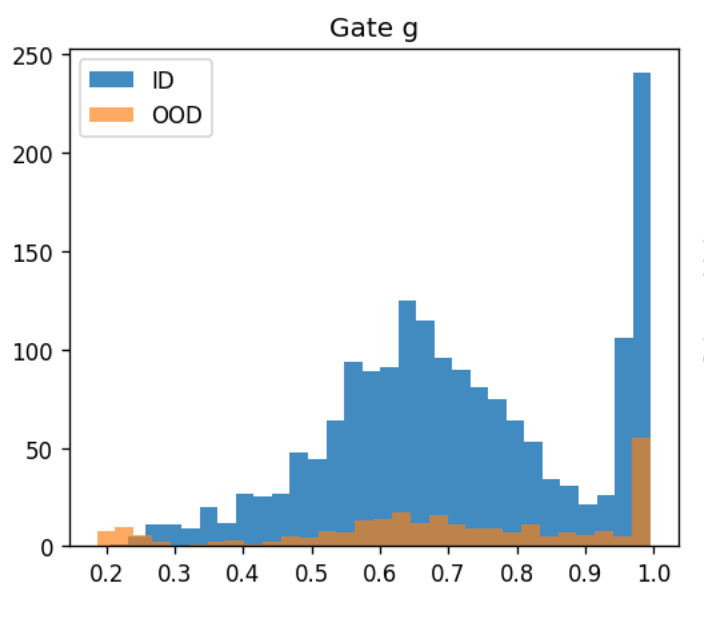

Gate g=sinθ balances experts

Override β=cosθ ensures safe fusion
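A minimal numerical sketch of the key equation may help make the "rotation" concrete. The poster presents the equation as architectural inspiration and does not say how the complex right-hand side becomes a probability, so this sketch assumes the modulus is taken and the result renormalised. The function name `rotate_distribution` and the example scores for A and N are hypothetical.

```python
import math

def rotate_distribution(p, A, N, theta, alpha=1.0, beta=1.0):
    """Evaluate the poster's update for a discrete distribution p.

    ASSUMPTION: the complex value is mapped back to a probability by
    taking its modulus and renormalising; the poster does not specify this.
    """
    amplitudes = []
    for p_a, A_a, N_a in zip(p, A, N):
        spread = math.sqrt(p_a * (1.0 - p_a))  # vanishes when p_a is 0 or 1
        shift = alpha * math.sin(theta) * A_a - 1j * beta * math.cos(theta) * N_a
        amplitudes.append(abs(p_a + spread * shift))
    total = sum(amplitudes)
    return [x / total for x in amplitudes]

p = [0.7, 0.2, 0.1]
A = [1.0, 0.0, 0.0]  # hypothetical factual attention scores
N = [0.0, 1.0, 0.0]  # hypothetical counterfactual negation scores

p_fact = rotate_distribution(p, A, N, theta=math.pi / 2)  # sin(theta) = 1
p_cf = rotate_distribution(p, A, N, theta=0.0)            # cos(theta) = 1
```

Under these assumptions, θ = π/2 moves mass toward the answer favoured by A, while θ = 0 moves mass toward the answer favoured by N.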

Column 2: Architecture & Method

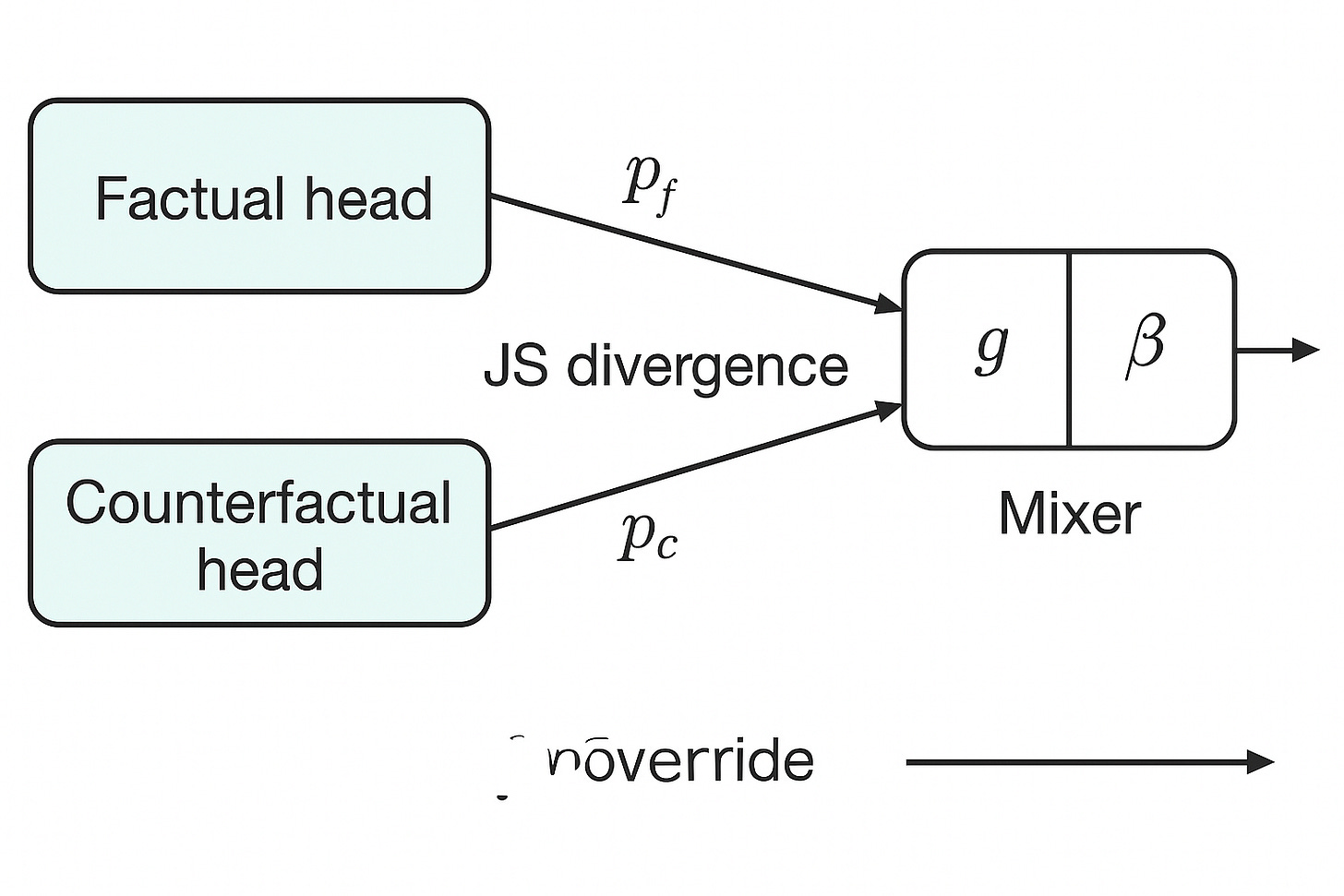

From equation → network

Two experts: factual pf and counterfactual pc.

Gate g controls mixture.

Override β provides safety cap.

JS divergence tracks disagreement (proxy for “rotation angle”).
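The disagreement signal above can be sketched in a few lines. The Jensen-Shannon divergence below is the standard definition (in nats). The `fuse` function assumes a simple convex mixture controlled by g, which the poster does not confirm. The override β is left out because its exact form is not given here.

```python
import math

def kl(p, q):
    """KL divergence in nats; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def js_divergence(p, q):
    """Jensen-Shannon divergence: symmetric and bounded by ln 2."""
    m = [0.5 * (pi + qi) for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def fuse(p_f, p_c, g):
    """ASSUMPTION: gate g in [0, 1] forms a convex mixture of the two experts."""
    return [g * f + (1.0 - g) * c for f, c in zip(p_f, p_c)]

p_f = [0.8, 0.1, 0.1]  # hypothetical factual expert output
p_c = [0.2, 0.7, 0.1]  # hypothetical counterfactual expert output

disagreement = js_divergence(p_f, p_c)
fused = fuse(p_f, p_c, g=0.6)
```

A JS value near 0 means the experts agree, and a value near ln 2 means they place mass on disjoint answers.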

Training recipe

Counterfactual warm-up

OOD rehearsal with fused supervision

Split calibration (ID: vector scaling; OOD: temperature scaling)
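The two calibration maps named in the recipe can be sketched as follows. Temperature scaling divides all logits by a single scalar T, and vector scaling applies a per-class weight and bias. How the poster's system decides whether an input is ID or OOD is not stated, so the `is_ood` flag and the `calibrate` helper are hypothetical, and the parameter values are illustrative rather than fitted.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def temperature_scale(logits, T):
    """Single scalar T > 0; T > 1 softens the distribution."""
    return softmax([z / T for z in logits])

def vector_scale(logits, w, b):
    """Per-class weight w_k and bias b_k applied to each logit."""
    return softmax([wk * z + bk for z, wk, bk in zip(logits, w, b)])

def calibrate(logits, is_ood, T, w, b):
    """ASSUMPTION: an external flag selects the OOD or ID calibrator."""
    if is_ood:
        return temperature_scale(logits, T)
    return vector_scale(logits, w, b)

logits = [2.0, 0.5, -1.0]
p_ood = calibrate(logits, is_ood=True, T=2.0, w=None, b=None)
p_id = calibrate(logits, is_ood=False, T=None, w=[1.0, 1.0, 1.0], b=[0.0, 0.0, 0.0])
```

Temperature scaling never changes the argmax, so it only adjusts confidence. Vector scaling can change the predicted class, which is one plausible reason to reserve it for ID data.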

Column 3: Results

Headline improvements

ID acc: 0.23 → 0.65

OOD acc: 0.06 → 0.58

Calibration (ECE): stable ~0.12–0.13

Entropy reduced (more confident, but still safe on OOD).
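For readers unfamiliar with the two metrics above, here is a minimal sketch of how expected calibration error (ECE) and predictive entropy are commonly computed. It assumes equal-width confidence bins; the poster does not state which binning scheme or bin count was used, and the sample data is made up for illustration.

```python
import math

def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted mean of |accuracy - confidence| over equal-width bins."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf = 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1.0 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(avg_conf - accuracy)
    return ece

def entropy(p):
    """Shannon entropy in nats; lower means a more confident prediction."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)

# Illustrative data: four predictions at 0.9 confidence, three of them correct.
ece = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, True, True, False])
```

In this toy case the model claims 90% confidence but is right 75% of the time, so the ECE is the 0.15 gap.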

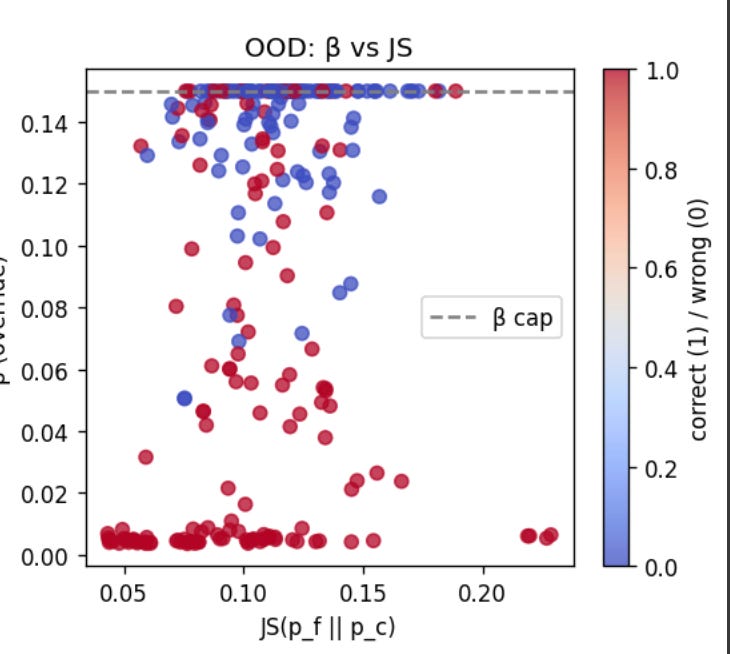

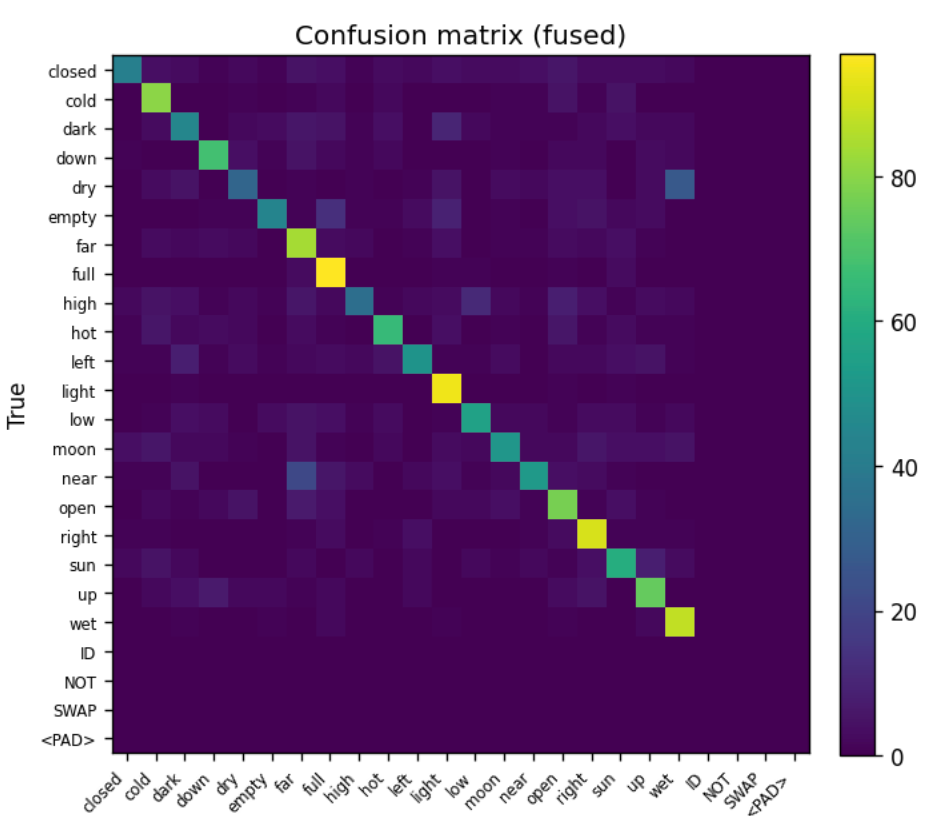

Plots (visual story)

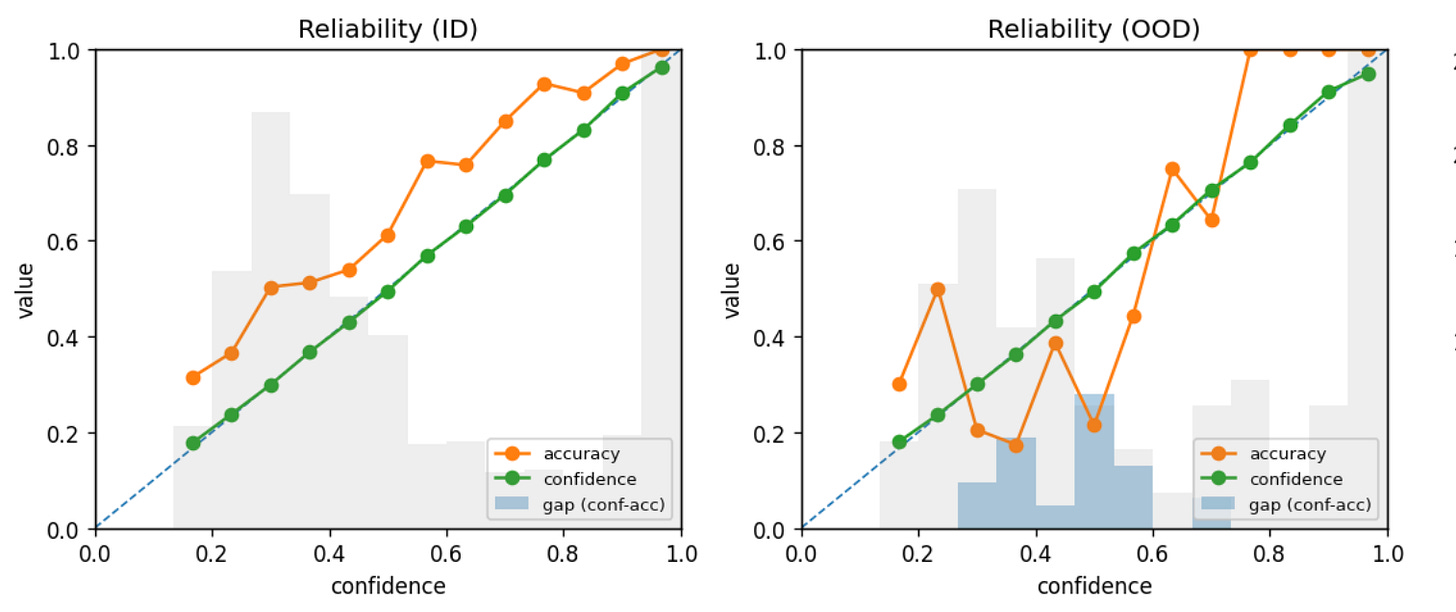

Reliability curves (ID vs OOD) → “Confidence matches accuracy even OOD.”

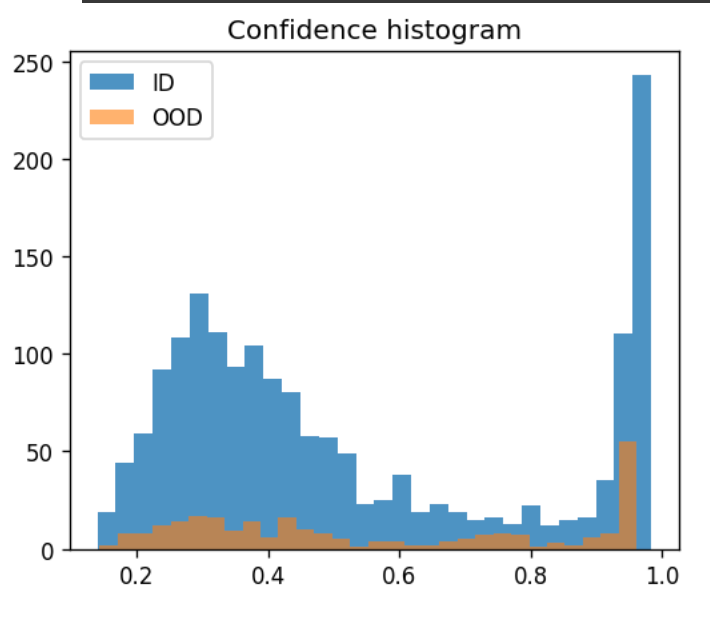

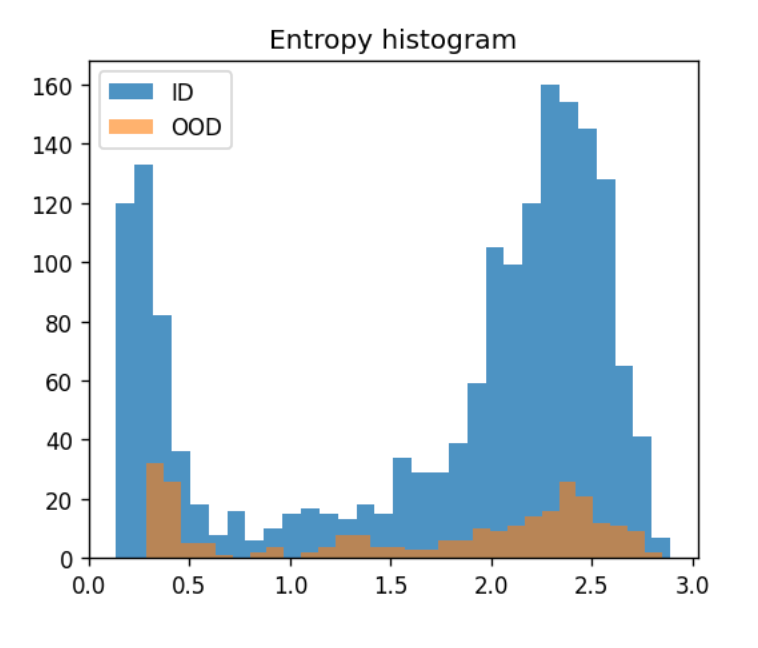

Confidence & entropy histograms (separation between ID/OOD) → “Clearer separation ID vs OOD.”

Gate g and β distributions (bounded, interpretable)

Scatter: β vs JS (correct vs wrong separation) → “β override correlates with correctness.”

Confusion matrix (fused predictions, errors diffuse not clustered) → “Errors diffuse, not clustered—safer failure.”

Takeaway

Dual-path = empirical approximation of rotation equation.

Provides robustness under OOD without loss of interpretability.

Negation as counterfactual — “if not X, then Y” — provides a simple, general mechanism for robustness across domains.

It may be possible to separate prediction and perception into dual heads, grounding one in values or training signals, so that safety becomes a structural feature of the system rather than an afterthought.

Small architectural tweak → large gains.

Crux: “Our dual-path design lets models hedge between factual and counterfactual evidence. This rotation improves out-of-distribution robustness, without losing interpretability. Small tweak, big gains.”

Footer

Code on GitHub: https://github.com/andrekramer/chevron/blob/main/dual_attention_heads.py

Co-authored with ChatGPT-5.

Open question: what robustness, reliability, and safety benefits might the dual-path design confer over standard ML approaches?

Seeking collaborators to explore extensions, theoretical grounding, benchmarks, interpretability, and safety.