A curious Rotation towards Counterfactuals?

A speculative poster by Andre Kramer with ChatGPT-5, andrekramermsc.substack.com

Column 1 – The R (Rotation) Rule

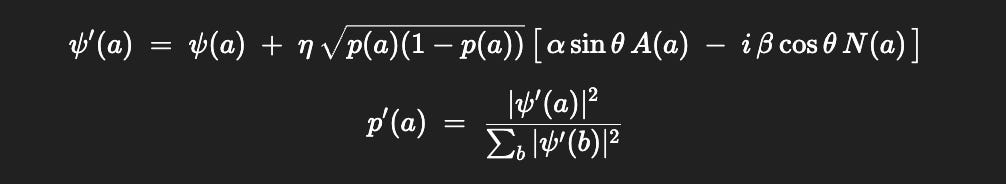

ψ′(a) = ψ(a) + η · sqrt(p(a)(1−p(a))) · [ α sinθ · A(a) − i β cosθ · N(a) ],

p′(a) = ∣ψ′(a)∣^2 / ∑_b ∣ψ′(b)∣^2.

p(a)=∣ψ(a)∣^2: probability / belief

A(a): evidence head (bottom-up)

N(a): normative head (top-down)

sqrt(p(1−p)): uncertainty gate

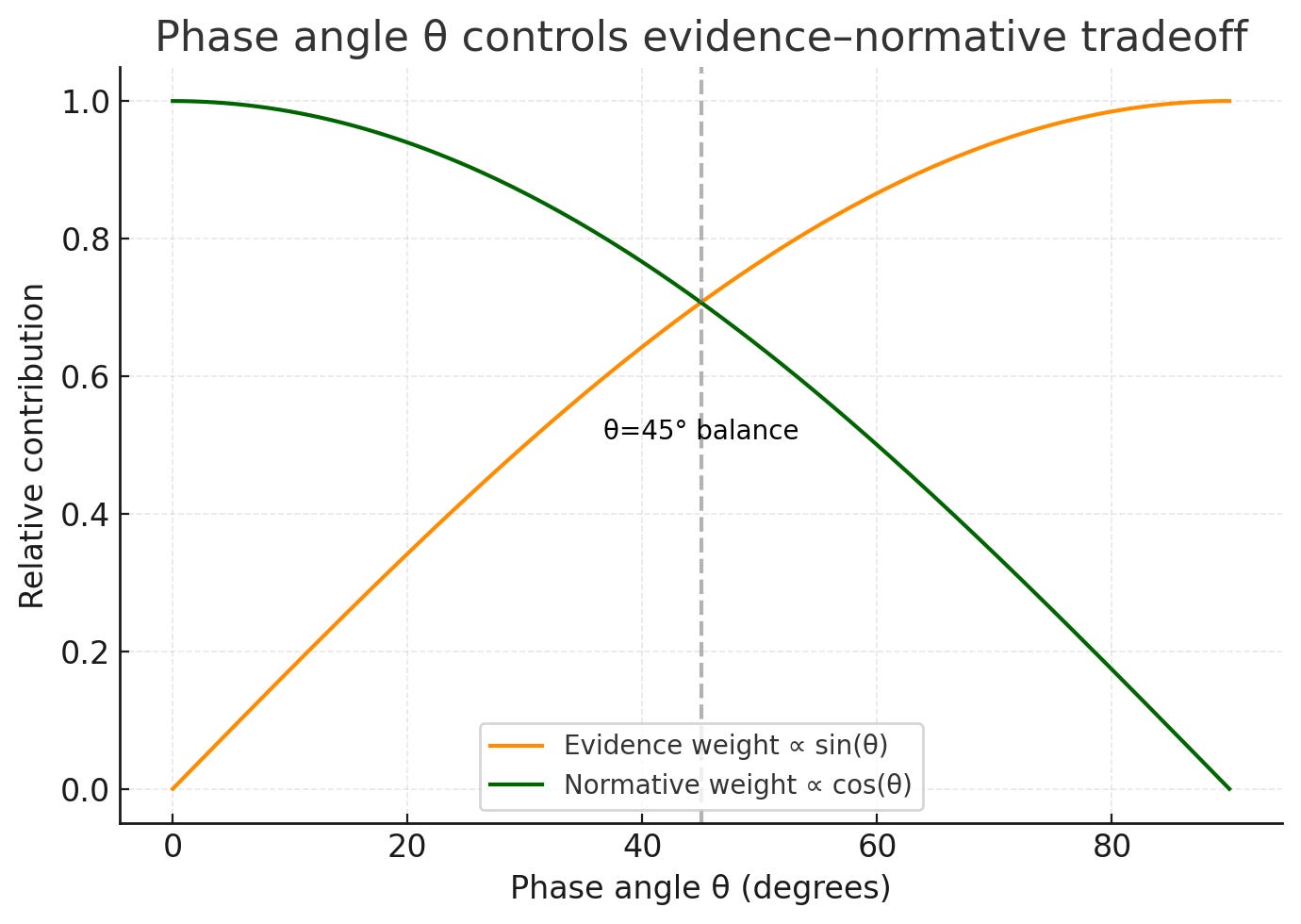

Phase angle θ: controls evidence–value tradeoff

A speculative extension of active inference: dual drives, orthogonal update channels, uncertainty-gated learning.
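The update above can be sketched numerically. The following is a minimal illustration, not the code from r-3-learners.py: the function name `r_rule_step`, the example values, the treatment of A and N as fixed real vectors, and the renormalization of ψ after each step (so the step can be iterated) are all assumptions made for this sketch.

```python
import numpy as np

def r_rule_step(psi, A, N, theta, eta=0.1, alpha=1.0, beta=1.0):
    """One R-rule update over a discrete set of options a (illustrative sketch).

    psi   : complex amplitudes, with p(a) = |psi(a)|^2
    A, N  : real evidence and normative signals per option (assumed given)
    theta : phase angle trading off the evidence and normative channels
    """
    psi = np.asarray(psi, dtype=complex)
    psi = psi / np.linalg.norm(psi)              # assumption: start from normalized beliefs
    p = np.abs(psi) ** 2
    # Uncertainty gate sqrt(p(1-p)): vanishes when an option is certain (p = 0 or 1).
    gate = np.sqrt(np.clip(p * (1.0 - p), 0.0, None))
    # Evidence drives the real channel, norms drive the imaginary channel.
    drive = alpha * np.sin(theta) * A - 1j * beta * np.cos(theta) * N
    psi_new = psi + eta * gate * drive
    psi_new = psi_new / np.linalg.norm(psi_new)  # assumption: renormalize so steps can be chained
    p_new = np.abs(psi_new) ** 2                 # equals |psi'|^2 / sum_b |psi'(b)|^2
    return psi_new, p_new

# Hypothetical three-option example.
psi0 = np.sqrt(np.array([0.5, 0.3, 0.2]))
A = np.array([1.0, 0.0, -1.0])   # evidence favors option 0, disfavors option 2
N = np.array([0.0, 1.0, 0.0])    # norms single out option 1

_, p_evidence = r_rule_step(psi0, A, N, theta=np.pi / 2)  # evidence channel only
_, p_norm = r_rule_step(psi0, A, N, theta=0.0)            # normative channel only
print(p_evidence, p_norm)
```

In this sketch, θ = π/2 switches on only the evidence channel and θ = 0 only the normative channel; intermediate angles mix the two. With real starting amplitudes, the normative channel shifts the probabilities much less than the evidence channel for the same η.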

Column 2 – Three Readings

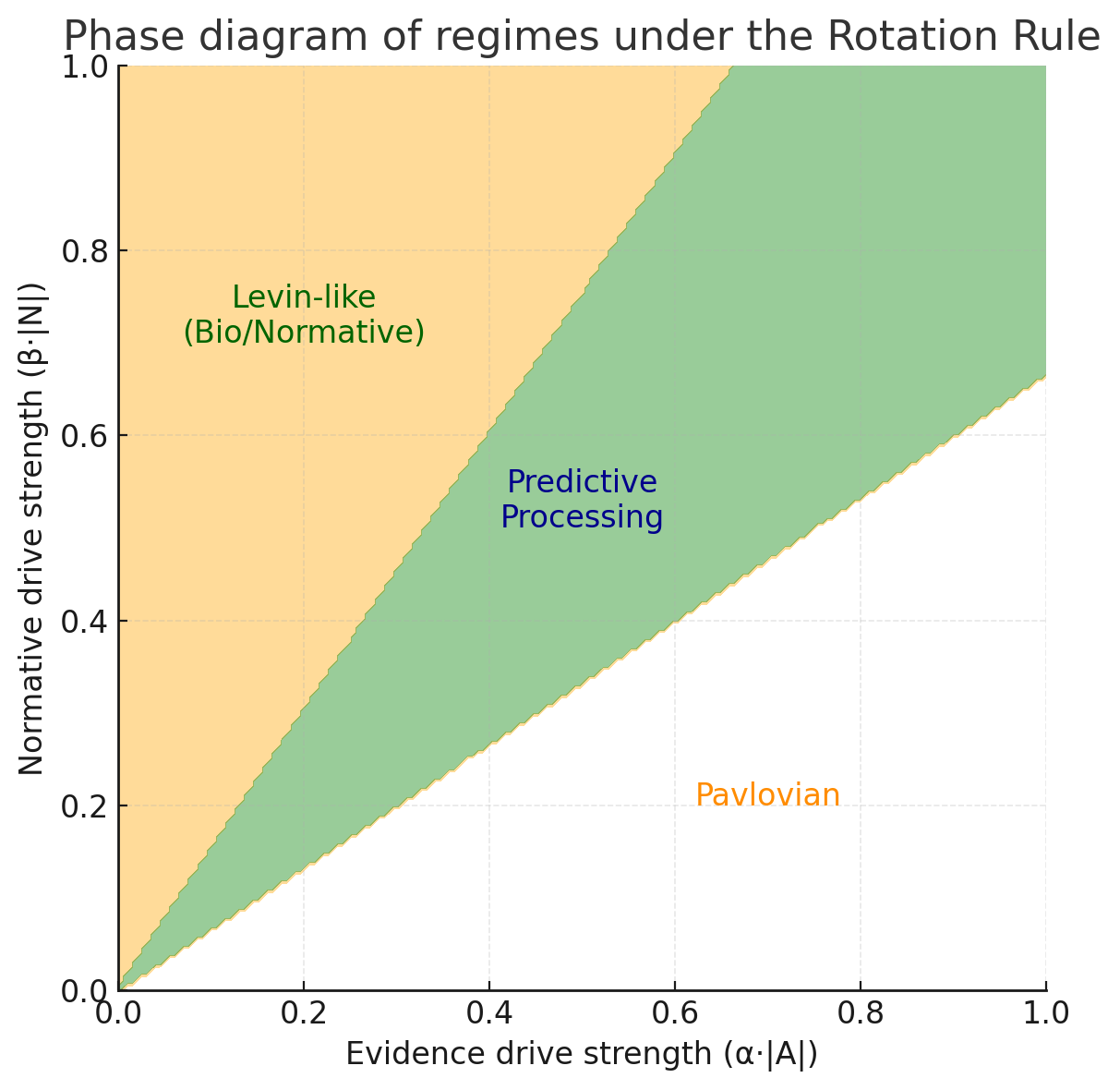

Pavlovian Conditioning

Write → Store → Read → Forget via CS-US pairing.

Predictive Processing

Evidence vs. value separation; precision-weighted learning.

Levin-Style Networks

Molecular loops with slow traces as emergent memory.

Simulation code and results:

👉 r-3-learners.py

👉 R-rule results

An application in Transformer models (dual attention heads):

👉 see separate ML poster.

Column 3 – Broader Resonances

Math echo: amplitude rotations like in QM (but not quantum).

Counterfactuals & negation: the “imaginary” channel encodes alternatives.

Recursion: the same rule bridges chemistry/biology, inference, and learning (applicable between levels).

Balance: evidence (exploitation) vs. values (exploration) (Actor & Critic)

Like a second-order Bayesian update: uncertainty-gated, dual-headed, phase rotated.

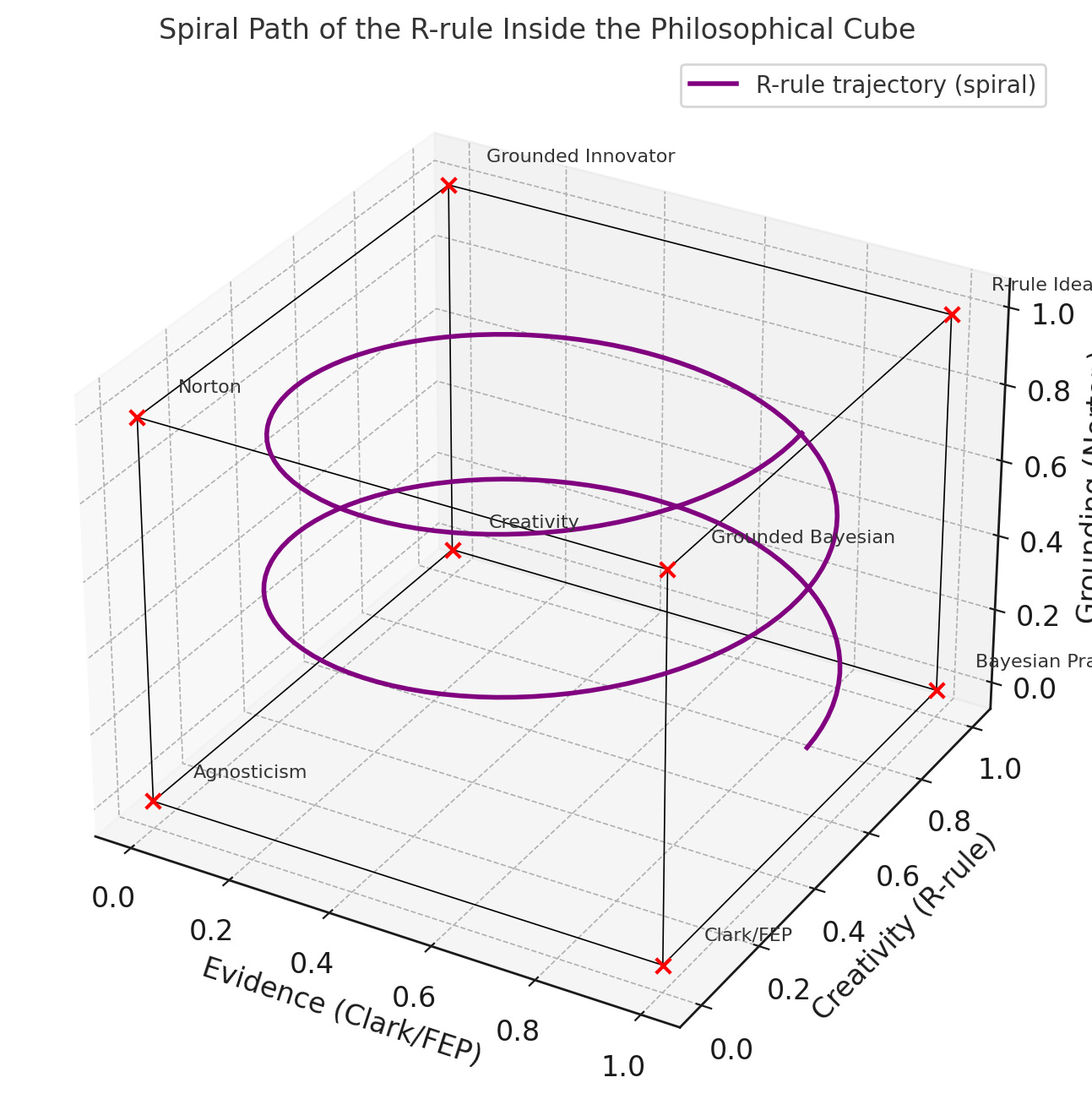

Summary: The R-rule is a Bayesian update extended to include counterfactuals and negations (“if not x, then y”) alongside evidence. Mathematically, it can be written as a double update or expressed as a complex function. It remains compatible with standard log/exp (softmax) updates used in Bayesian schemes such as active inference and deep learning.
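One way to make the "double update" reading concrete is to expand the squared modulus. This is a sketch under an added assumption that is not stated on the poster: ψ(a), A(a), and N(a) are all real, and g(a) is shorthand introduced here for the gated step size.

```latex
g(a) = \eta \sqrt{p(a)\,\bigl(1 - p(a)\bigr)}

\psi'(a) = \bigl[\psi(a) + g(a)\,\alpha \sin\theta \, A(a)\bigr]
           \;-\; i\,\bigl[g(a)\,\beta \cos\theta \, N(a)\bigr]

|\psi'(a)|^2 = \bigl(\psi(a) + g(a)\,\alpha \sin\theta \, A(a)\bigr)^2
             + \bigl(g(a)\,\beta \cos\theta \, N(a)\bigr)^2
```

Under this assumption, the real (evidence) and imaginary (normative) parts are updated separately and only combine when probabilities are read out. The evidence term enters at first order in η through the cross term 2ψ(a)g(a)α sinθ A(a), while the normative term adds a non-negative contribution at second order. If ψ(a) already carries an imaginary part, the normative term also contributes at first order.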

Key Takeaway

A system can maintain robustness and adaptability by recursively updating its internal state through the modulation of evidence and norms, gated by uncertainty:

ψ′ = ψ + uncertainty(ψ) · ( sinθ · evidence(ψ) − i cosθ · norms(ψ) ),

with probabilities recovered as

p′(a) = ∣ψ′(a)∣^2 / ∑_b ∣ψ′(b)∣^2.

Here, evidence and norms themselves update across levels, enabling a recursive process where uncertainty acts as a structural driver of adaptation.

Open Question: Where could this rule be most useful?

Looking for collaborators to explore concrete applications.