[A somewhat technical post this week that shows how multiple models can be used to build systems that both plan and check for errors or AI safety risks. Feel free to skip this week or skip over the details.]

Agents or agentic (or goal oriented) behaviour have been slated as being the AI trend of 2025. From a safety perspective, I’ve been reluctant to pursue that particular goal ;) but given where we are today with the race to AI being maximally accelerated, I’ve added an agentic loop to Triskelion with AI safety in mind as a counterpoint to what I’m seeing already being deployed.

The loop consists of three models coordinating to update a (currently virtual) world. One model plans (“Plan” in diagram above), another validates that plan (by doing a “Test” of the plan against the world) and a third model acts on the plan to update the world (“Act”).

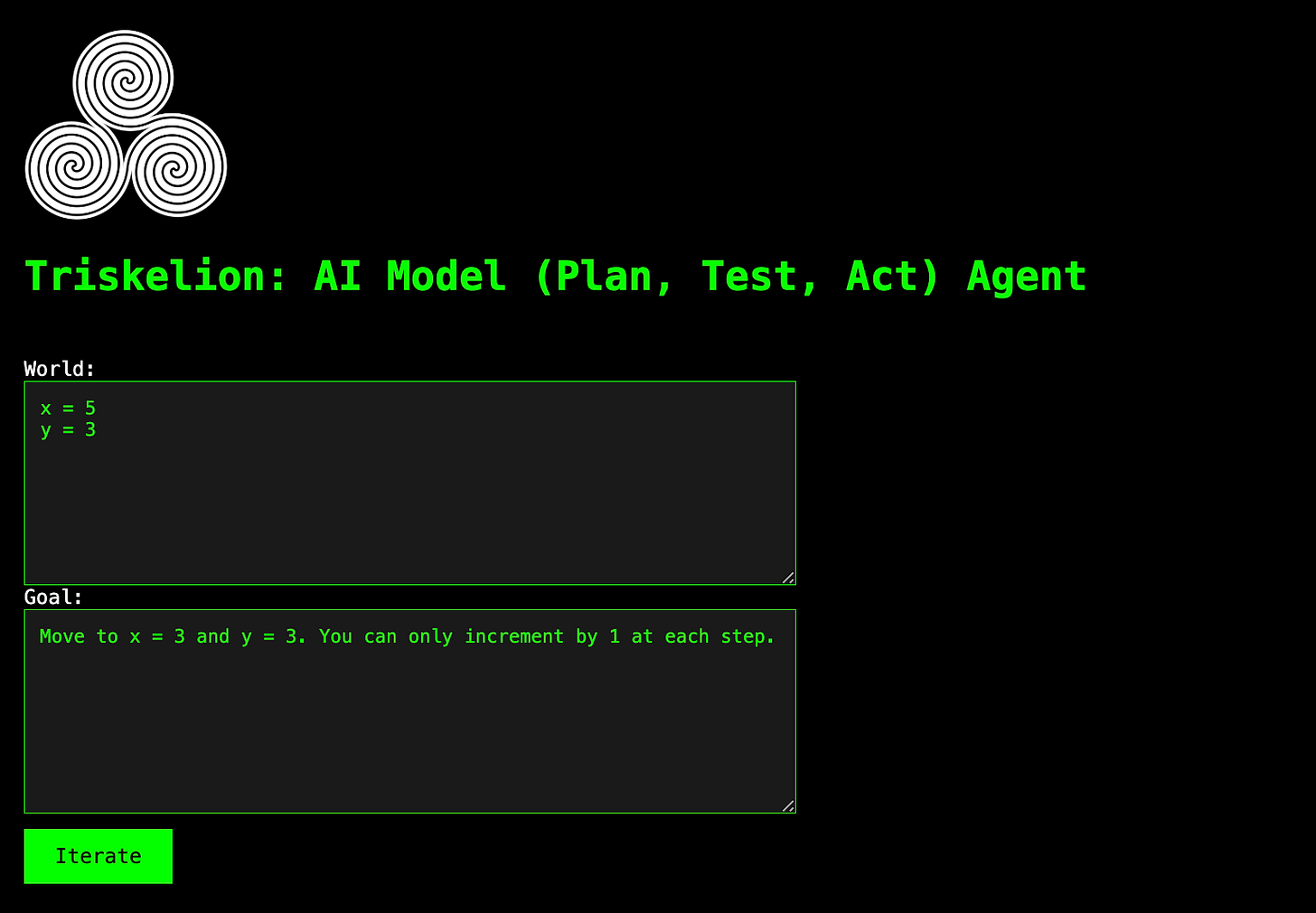

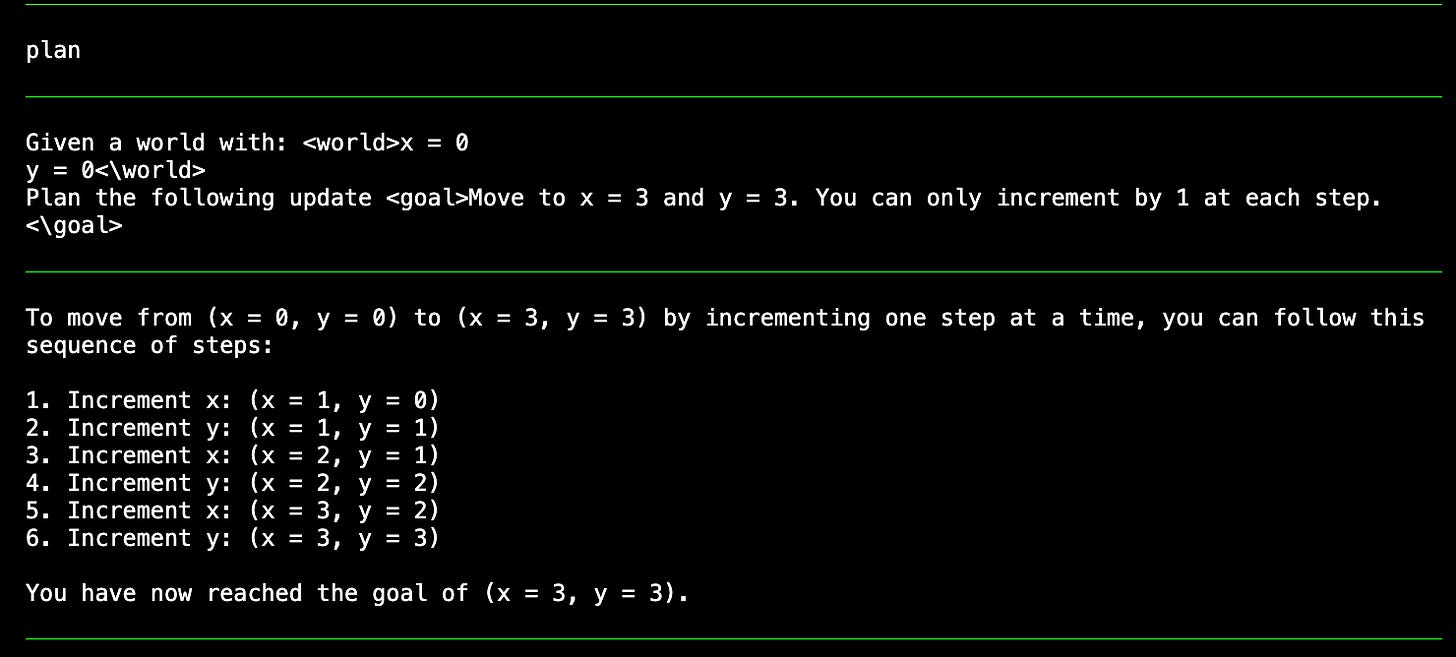

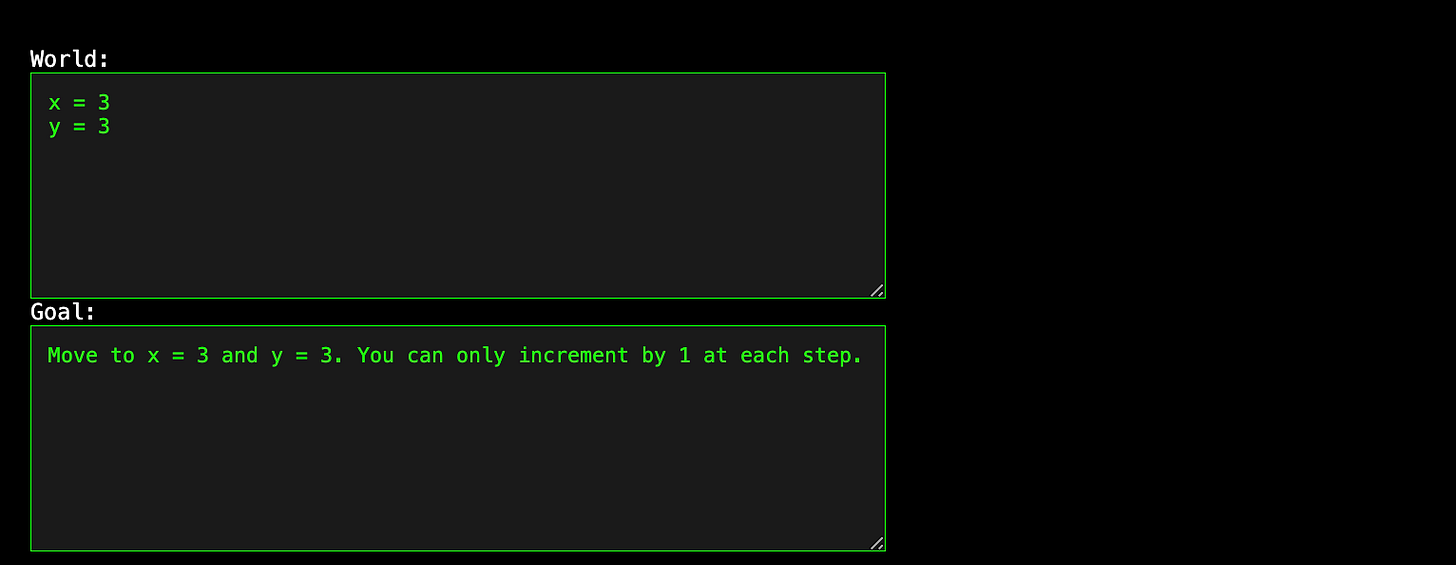

As a toy example, consider a world with a position at x = 0 and y = 0 and a given goal to get to x = 3 and y = 3. A constraint is that you can only move by 1 at each step. With a web browser pointed at http://127.0.0.1:5000/agent and world and goal manually copied into the text boxes:

On clicking “Iterate”, the first model comes up with a plan:

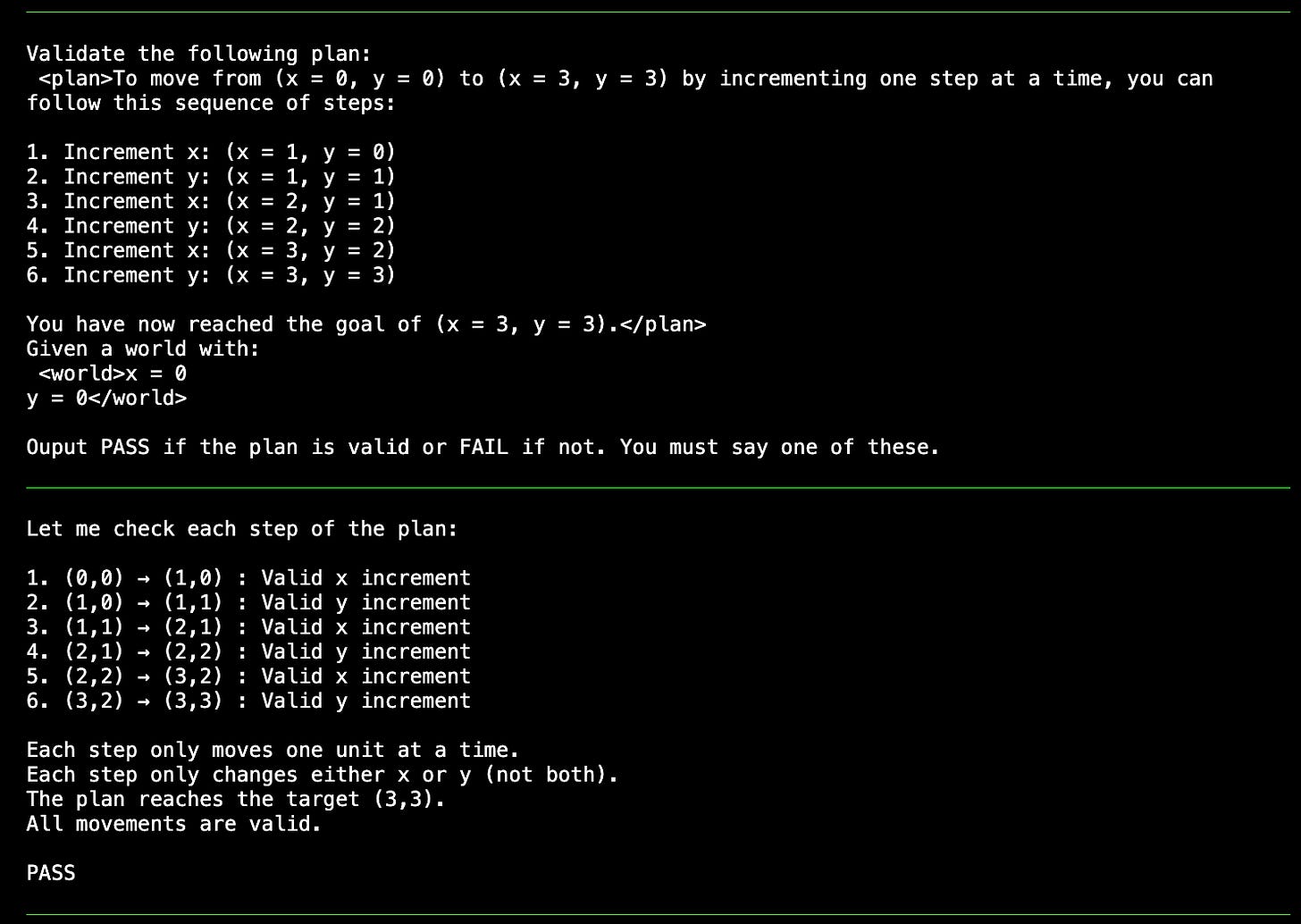

That plan is validated by the second model and PASS is returned in its output:

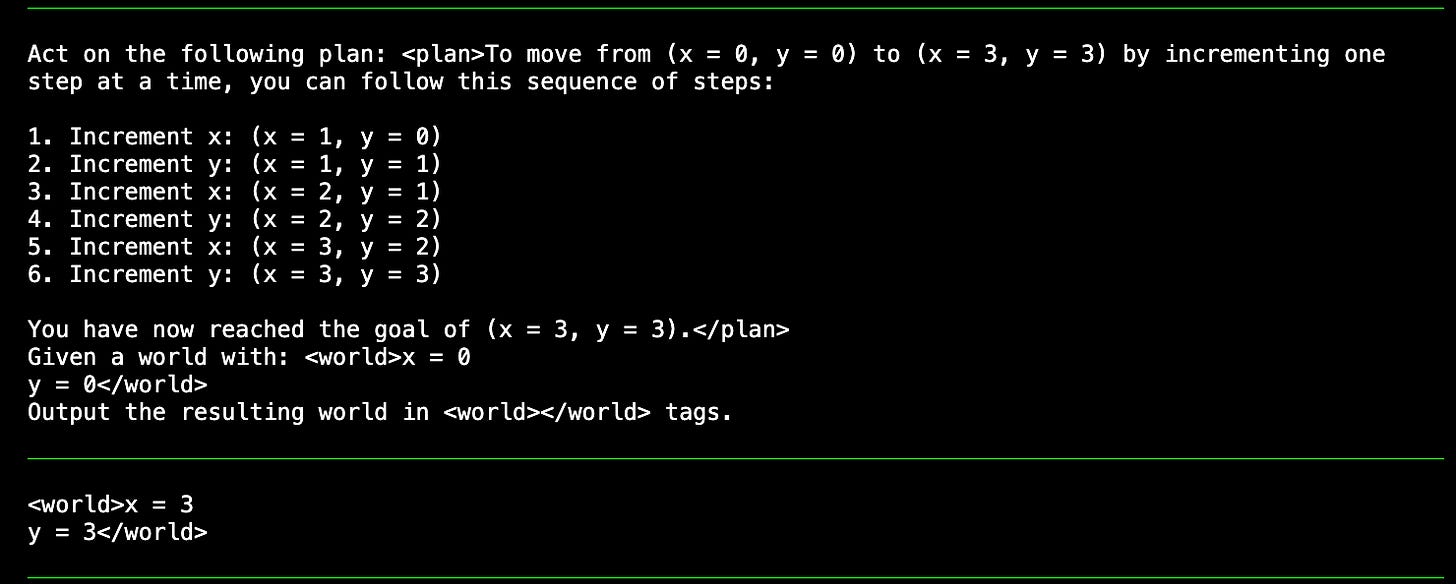

The third model then acts on the (tested) plan:

The results is an updated world with x and y equal to 3 and the goal is reached (in one step):

The world state and the goal could be any text (if multi modal models were supported then images or sounds could be used too). Think of it as giving an agent a task but with the “Agent” internally being a collaboration to update a “world” (which could be a computer’s environment or could even direct a robot).

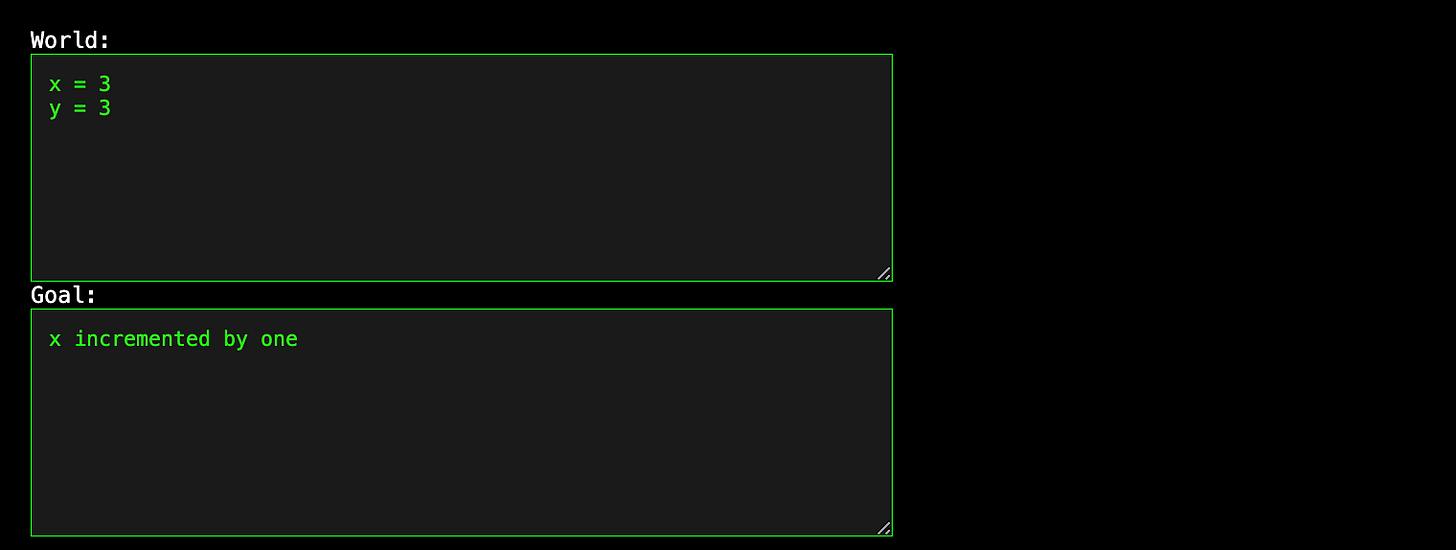

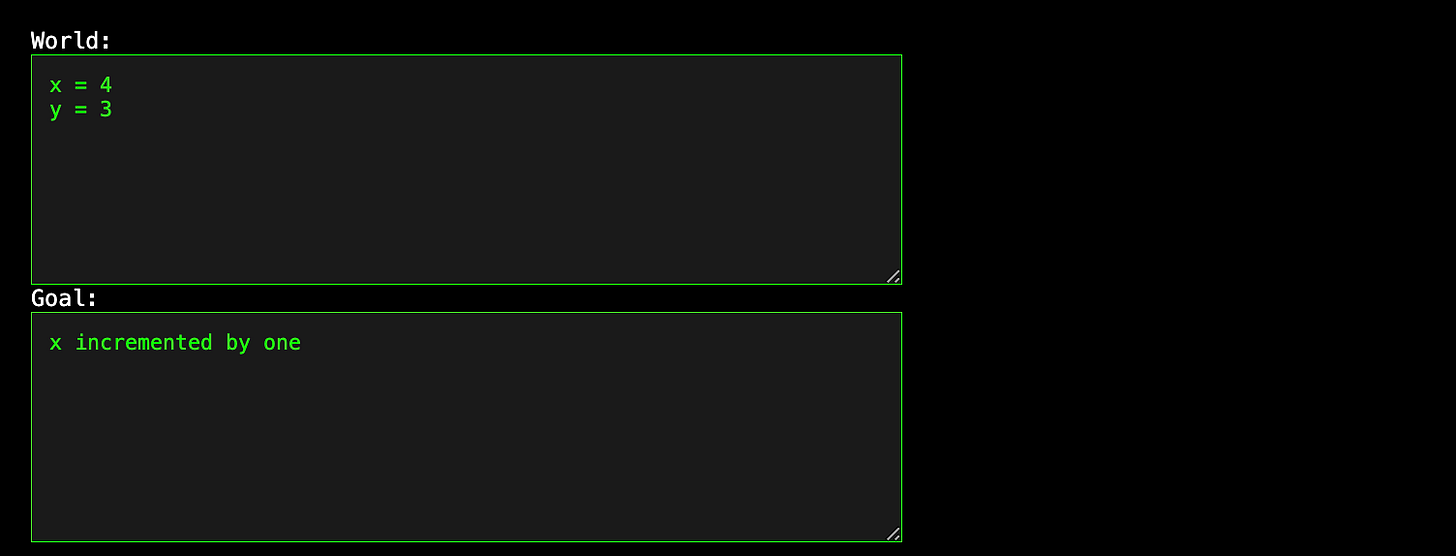

If we change the goal to just say “x incremented by one”:

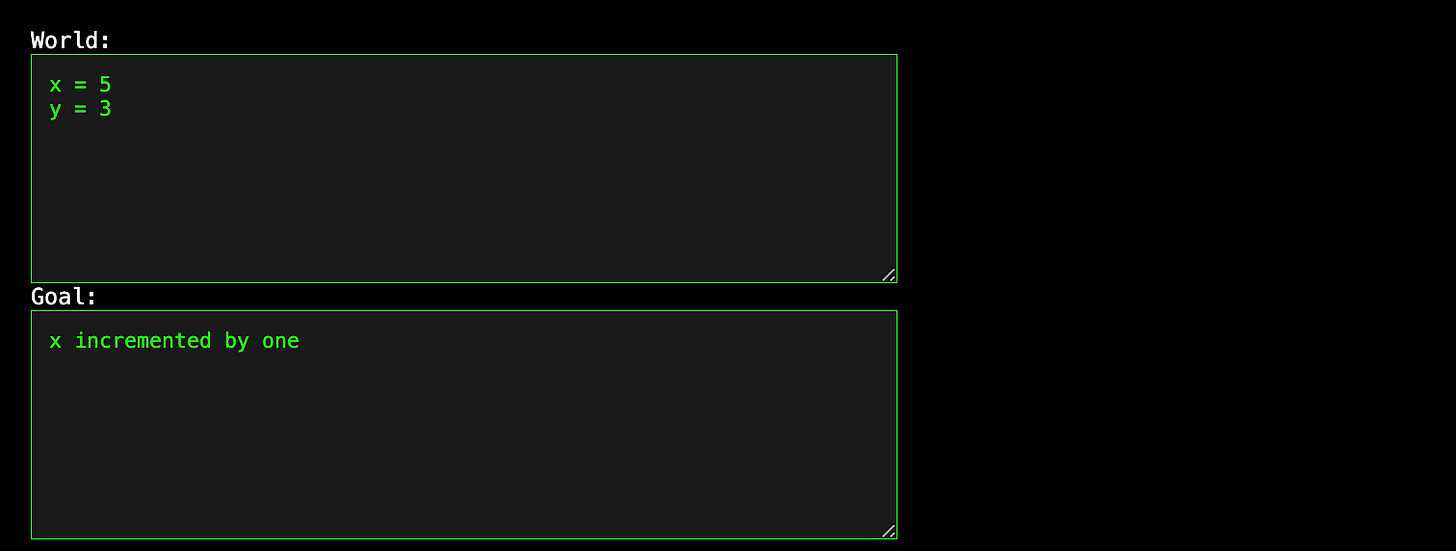

We can then iterate to step x up:

and again:

etc.,

The above was done with OpenAI ChatGTP-4o as Planner, Claud 3.5 Sonnet as Tester and Gemini 2.0 flash Exp as Actor model. The same can be done with a simple command line app run with:

python act.py

If you did this, you (as I am) may be disappointed by the speed of the updates if all models used are remote LLMs from the frontier model providers. You can configure local models or simpler or specialised models could be used to perform actions and this after all is just a demonstration of what will soon be accelerated.

If the Test fails (FAIL or no PASS in output) then the planner is called again. There can be genuine errors either in planning or testing to mask. Also there is no test to check that the update to the world went to plan. The real world can be observed and that view or the resulting virtual world representation is used as the next input to allow the planner to react again and, potentially, come up with a new plan.

The resulting system shows that a second model can be used to validate or verify a model generated plan and even check for different types of unsafe behaviour*. This assumes no collusion between the models and does not give any absolute robustness guarantee.

But in my opinion, such collaborative multi-agent systems are a state of the art way to mitigate the errors and risks inherent in current models and I would recommend their application to any agentic, goal-oriented system being built today.

I’ve recently seen majority voting and multiple model runs being used to improve model performance (usually on benchmarks) and the AI safety community is starting to look to using one model to “police” another. Triskelion is only one example of this trend.

Such schemes are never fool proof. Here’s what OpenAI with reasoning (o3-mini) had to say:

Prompt: Given 3 agents: planner, tester and actor where the planner produces a plan given a goal and a world and the tester checks the plan against the goal and the actor modifies the world. How can an unsafe state still happen?

Reasoned for 13 seconds

Even with three specialized agents—a planner generating a plan, a tester verifying it, and an actor executing it—unsafe states can still arise due to several factors:

Incomplete World Models:

The planner’s model of the world might be simplified or outdated. If critical safety constraints or dynamic elements aren’t accurately represented, the plan—even if it meets the goal in the model—can lead to unsafe outcomes in the real world.

Testing Limitations:

The tester might focus only on whether the plan achieves the stated goal, neglecting broader safety concerns. For example, the tester might check that the plan reaches a numerical target but not assess risks or side effects in the environment.

The simulation or criteria used in testing might omit rare or emergent conditions that can lead to hazards once the plan is executed.

Temporal Gaps and World Changes:

There can be delays between planning, testing, and acting. During these gaps, the world may change (e.g., conditions, resources, or external events), invalidating the assumptions under which the plan was deemed safe.

Execution Errors:

The actor may not implement the plan precisely as intended. Even minor deviations during execution can compound, especially if the plan was finely tuned for safety.

Uncertainty or noise in sensor data and actuator performance can result in actions that push the world into unsafe regions.

Inter-Agent Coordination Issues:

Miscommunication or misalignment between agents—for instance, if the tester’s safety criteria are not fully communicated to the actor—can lead to unintended behaviors.

If each agent works with its own local view of “safety,” the overall system might miss emergent interactions that become hazardous when combined.

In summary, even when each agent appears to be doing its part, mismatches between models, delayed feedback, and execution errors can lead to a situation where the shared world enters an unsafe state.

o3-mini

Thanks for reading,

Andre

* One important use case is where the plan that is generated is code (in some high level language such as Python) and the Tester analyses that code is some way (e.g. generate and run unit tests) and the “act” model deploys that code to some production environment.

I've added an analysis from OpenAI ChatGPT (o3-mini) pointing out what this scheme does not cover. You can also ask an LLM about the advantages of this scheme compared to a monolithic agent where all the safety guarantees are within the one model. I think this is a good way to motivate AI safety by using AI!